I’m on a quest to find profitable micro SaaS ideas. Validation is hard and messy, and while I haven’t struck gold yet, I’m reflecting on and improving the process to increase my velocity with this project. To that end, let me share a recent journey of validating a SaaS idea in the product analytics space.

Ideas on paper

It all started with an idea. I sat down for some grueling minutes and just punched out a few micro SaaS ideas that came to mind. As with the good part of Instagram, there is no filter at this point.

With a list of roughly twenty ideas, I rated each one on a scale from 1 to 5 for personal interest, business potential, and “MVP-ability”. Don’t let the numbers fool you: this is purely subjective and based solely on my own estimation. The quantification is just a way of forcing myself to be explicit.

Dipping the toe

The lucky winner was an idea for a Slackbot connecting analytics tools with Slack. Working in a data-driven way is challenging for companies, and my hypothesis was that pushing KPIs to people proactively would help with this. The Slackbot would regularly send predefined templates of the main KPIs and visualizations to Slack channels.

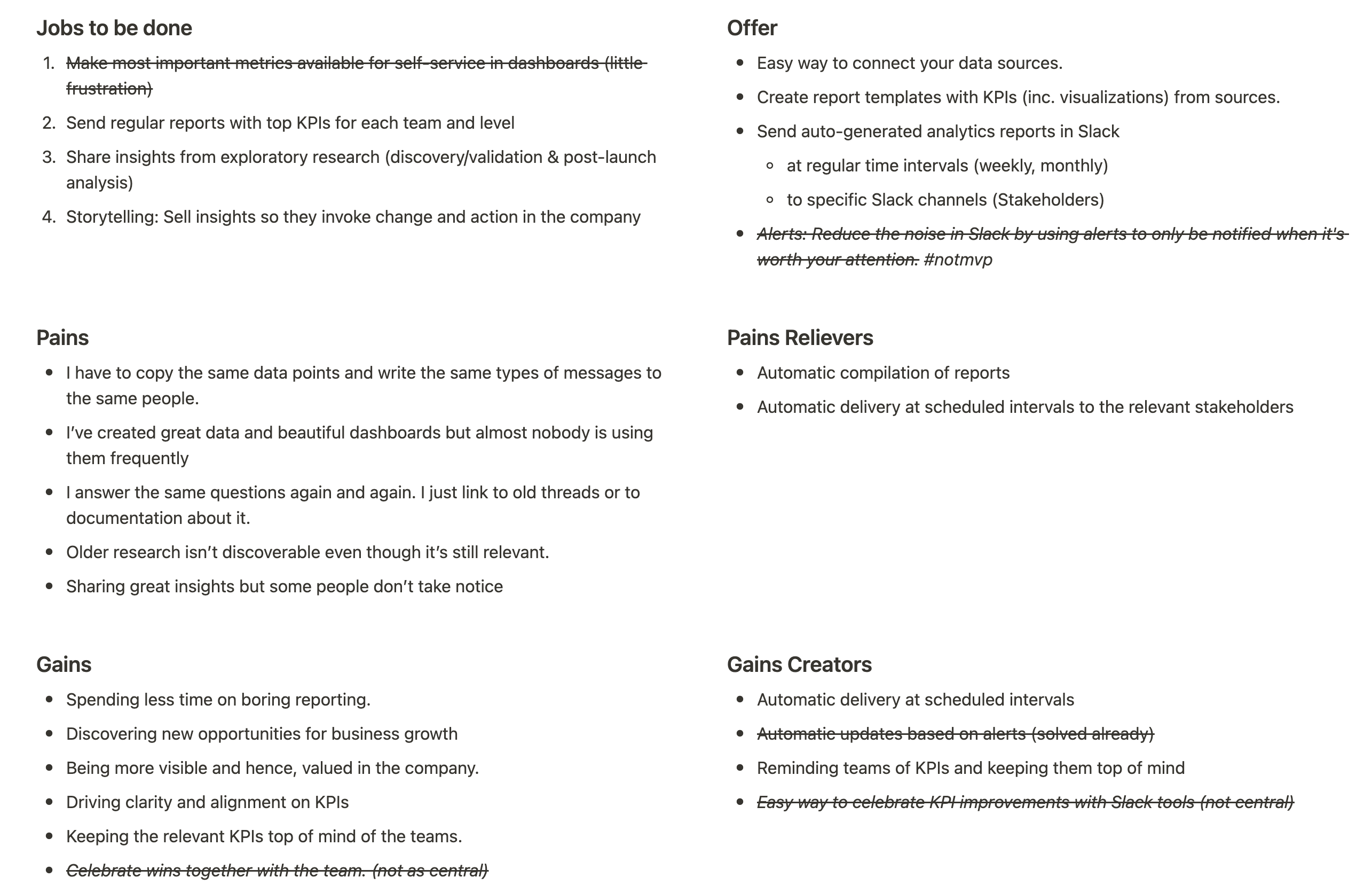

But first, I took a step back and mapped out the jobs, pains, and gains of who I thought the potential customers for this solution were: data and product analysts. On the right side, I put the value proposition of the Slackbot solution I had in mind. The format is based on Strategyzer’s Value Proposition Canvas.

Diving into the deep end

At this point, I only had a limited understanding of how these roles work, based on my experience from previous jobs. I had to find out whether my hallucinations about their jobs, pains, and gains held up in the real world.

So I talked to some data and product analysts I knew. The understanding and insights I got from them went directly into the doc and, in turn, led to changes in the value proposition. Unsurprisingly, many of my assumptions were wrong or at least inaccurate, and I learned quite a lot from these conversations.

During this time, I also did a competitor analysis and found almost exactly my idea: a product called datacard.io! However, it no longer existed; the last activity on its social media accounts was around 2017. Old news, then, but I needed to know why it hadn’t worked back then, so I found out who built it and contacted him. He was kind enough to have a chat about his experience.

It turned out that the tool worked: he had a loyal customer base and was even the No. 1 analytics app for Slack for a while. What didn’t work was monetization. Customers’ willingness to pay wasn’t where he wanted it to be, so he ditched the project. This was a potential risk to keep in mind.

Initial research on forums like Reddit and those of other analytics tools showed that some people were looking for the functionality I wanted to build, and their posts drew a bit of engagement from the community. This didn’t suggest the idea would be a blockbuster hit, but it did hint that there might be a niche of people who wanted it.

So now I had a lot of contextual understanding of the potential customer, some initial evidence of the desirability of the idea, and a potential viability risk.

Going forward

The next step was to test the desirability of the idea more deeply, because if people don’t want it, nothing else matters. To do this, I created a landing page as if the product already existed, wrote copy inspired by my conversations with data analysts, and added some Figma mockups of how the product could look in action.

Next, I went on LinkedIn and reached out to product analysts at startups and scale-ups (where I thought the idea had the biggest potential). Although about 90% of people declined or ignored my request, I found some people to talk to, most of them through other people’s networks. This is always my least favorite part, and I learned just how much it pays to have direct access to the customer group you want to target.

I showed them the prototype and got feedback. It was generally positive; not overwhelmingly so, but good. At this point, there was no reason not to build a first MVP (or perhaps to run some fake-purchase tests first to check monetization). However, I didn’t follow through and dropped the idea in the end.

Why?

Because it turned out that Looker by Google already had most of the functionality I wanted to build. I had missed the tool in my initial research but looked it up after it came up in several interviews. The competition alone was a bad sign, but it might not have been enough for me to drop the idea, since micro SaaS thrives on niches and specific use cases that the big players can’t or won’t cover.

What made me drop it was that none of the interviewees knew about this feature in Looker. If the pain were real, I would have expected at least some of them to go looking for a solution and come across it. To me, this was evidence that, despite the positive interview feedback, the pain wasn’t there, or at least wasn’t big enough for them to do something about it. Combined with the monetization risk (which is, of course, related), this led me to conclude that pursuing the idea further wasn’t a good bet anymore.

Iterations

I didn’t ditch the product analytics niche completely at first. I had gathered some good insights, so I iterated on the idea two more times, but those iterations didn’t work out either.

One iteration moved toward a tool for building and collaborating on KPI trees to align an organization around data. The second was about offering analytics event tracking plan templates and no-code implementation tooling. Neither passed validation.

Conclusion and Learnings

What did I take away from this journey?

First, validation is so much easier if you’ve got direct access to the target persona. This seems quite obvious and wasn’t new to me either, but I hadn’t grasped how much harder validation is without it.

Second, if you have a concrete idea, skip the exploration interviews and move directly to other testing methods. Even though I learned a lot in the initial interviews with data and product analysts, I learned considerably more in the ones where I brought a landing page or mock-up. When you already have an idea, just test it. If it doesn’t hit the mark, you can still switch back to exploration.

Third, beware of the sunk cost fallacy. On the one hand, it made sense to keep iterating on the idea because I had gained an understanding of the target persona. On the other hand, to be honest, my heart wasn’t in it anymore after the second iteration, and I only continued because I had already spent so much time on the topic. In these situations, it’s best to move on.

Now I’m back where I started, generating ideas and going through the process again… and probably again… until something works.

It’s all part of the game. Let’s keep building.